Artificial intelligence (AI) covers a broad range of tools and methodologies. We all interact with AI to a greater or lesser extent, from mundane activities such as Siri choosing our music to deadly serious applications in healthcare and transportation. Our use of AI grows with every passing year, but there is a perception that the more advanced it becomes, the less people understand how it works.

This disconnect is a dangerous one. If man and machine are to work together, there needs to be trust. There also needs to be auditable proof that the AI is achieving what it set out to achieve, just as there is for any business process. If there is only an imperfect understanding of what the AI does and how it does it, neither is possible. It would be a little like accepting “because I said so” as an explanation.

Going down the rabbit hole with NLP and Knowledge Graphs

Explainable AI is all about closing this gap. Two tools come to the fore here: Natural Language Processing (NLP) and Knowledge Graphs. With NLP, an AI system processes data expressed in ordinary human language and extracts facts from it. A Knowledge Graph then represents those facts as nodes and shows how the system connects them.

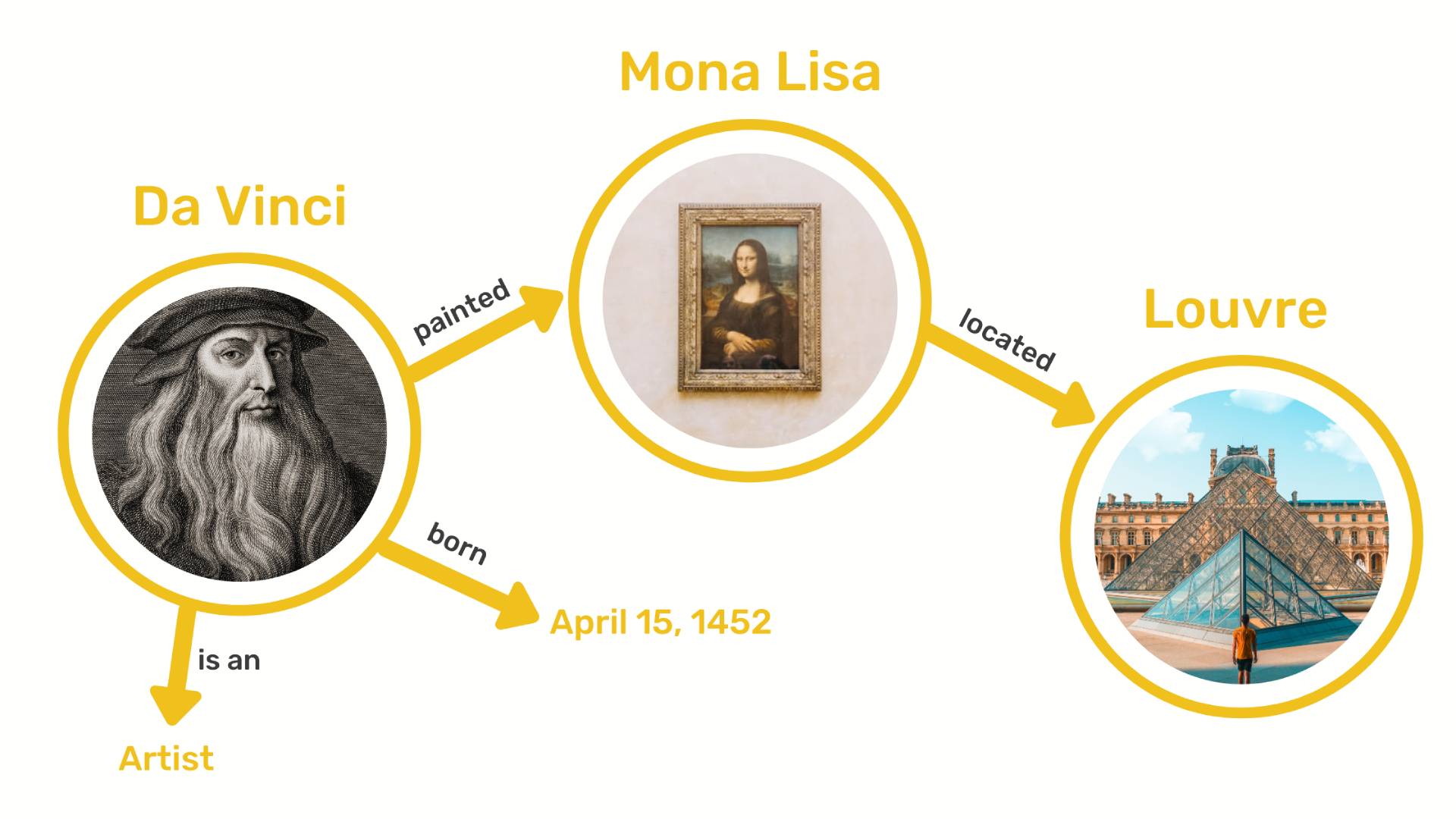

Let’s look at a simple example. An AI system might read the Wikipedia page for Leonardo da Vinci and pick out three facts about him:

- He was an artist

- He painted the Mona Lisa

- He was born in 1452
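To make the NLP step concrete, here is a deliberately tiny sketch in Python. It assumes the facts arrive as short, predictable sentences and uses hand-written regular expressions to turn each one into a (subject, relation, object) triple; the patterns and the relation names `is_a`, `painted` and `born_in` are hypothetical. Real NLP systems rely on trained models for entity and relation extraction, and for working out who “He” refers to, so this only illustrates the shape of the output.

```python
import re

# Hypothetical hand-written patterns: each maps one sentence shape to a relation name.
PATTERNS = [
    (re.compile(r"^He was an? (\w+)$"), "is_a"),
    (re.compile(r"^He painted the (.+)$"), "painted"),
    (re.compile(r"^He was born in (\d{4})$"), "born_in"),
]


def extract_triples(subject, sentences):
    """Turn simple sentences about `subject` into (subject, relation, object) triples."""
    triples = []
    for sentence in sentences:
        text = sentence.strip().rstrip(".")
        for pattern, relation in PATTERNS:
            match = pattern.match(text)
            if match:
                # "He" is simply assumed to mean `subject`; no real coreference resolution.
                triples.append((subject, relation, match.group(1)))
                break  # first matching pattern wins; unmatched sentences are skipped
    return triples


facts = ["He was an artist", "He painted the Mona Lisa", "He was born in 1452"]
for triple in extract_triples("Leonardo da Vinci", facts):
    print(triple)
```

Running it prints one triple per fact, for example `('Leonardo da Vinci', 'painted', 'Mona Lisa')`. Any sentence that fits none of the patterns is dropped, which is exactly why production pipelines use learned models rather than fixed rules.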

On a Knowledge Graph, the Leonardo da Vinci node would connect to the Artist, 1452 and Mona Lisa nodes. But he is not the only artist. As the AI learned more, the Artist node would also connect to John Constable, Tracey Emin and others. Likewise, the Mona Lisa node would connect to the Louvre node, the Tracey Emin node would connect to her birthplace of Croydon, and so on.
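The connections described above can be sketched as a small graph in Python. This is an illustration under stated assumptions, not how any particular product stores its data: the facts are written out by hand, the relation names (`is_a`, `located_in` and so on) are hypothetical, and each edge is indexed from both ends so that the Artist node can list its artists just as the Leonardo da Vinci node can list its facts.

```python
from collections import defaultdict

# Hand-written facts from the example above; relation names are hypothetical.
TRIPLES = [
    ("Leonardo da Vinci", "is_a", "Artist"),
    ("Leonardo da Vinci", "painted", "Mona Lisa"),
    ("Leonardo da Vinci", "born_in", "1452"),
    ("John Constable", "is_a", "Artist"),
    ("Tracey Emin", "is_a", "Artist"),
    ("Mona Lisa", "located_in", "Louvre"),
    ("Tracey Emin", "born_in", "Croydon"),
]


def build_graph(triples):
    """Index each fact from both ends so every node can list its neighbours."""
    graph = defaultdict(list)
    for subject, relation, obj in triples:
        graph[subject].append((relation, obj))
        graph[obj].append((f"inverse_{relation}", subject))
    return graph


def neighbours(graph, node):
    """Names of all nodes directly connected to `node`, sorted for stable output."""
    return sorted(other for _, other in graph.get(node, []))


graph = build_graph(TRIPLES)
print(neighbours(graph, "Leonardo da Vinci"))  # → ['1452', 'Artist', 'Mona Lisa']
print(neighbours(graph, "Artist"))  # → ['John Constable', 'Leonardo da Vinci', 'Tracey Emin']
```

Storing the reverse edge as well is a design choice: it doubles the number of entries but means no node is a dead end, which matters once you want to trace a route between any two facts.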

In effect, a Knowledge Graph is a road map. It provides step-by-step connections that demonstrate an AI system’s reasoning.
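Because every edge is a stated fact, an explanation can be read straight off the graph as the chain of edges linking two nodes. The sketch below makes that idea concrete with a breadth-first search, which returns the shortest such chain. It uses the same hand-written facts and hypothetical relation names as the Leonardo da Vinci example, and it is one simple way to surface a path of reasoning, not Sprout.ai’s method.

```python
from collections import deque

# Hand-written facts from the Leonardo da Vinci example; relation names are hypothetical.
TRIPLES = [
    ("Leonardo da Vinci", "is_a", "Artist"),
    ("Leonardo da Vinci", "painted", "Mona Lisa"),
    ("Leonardo da Vinci", "born_in", "1452"),
    ("John Constable", "is_a", "Artist"),
    ("Tracey Emin", "is_a", "Artist"),
    ("Mona Lisa", "located_in", "Louvre"),
    ("Tracey Emin", "born_in", "Croydon"),
]


def build_graph(triples):
    """Index every fact from both ends so the search can walk edges in either direction."""
    graph = {}
    for subject, relation, obj in triples:
        graph.setdefault(subject, []).append((relation, obj))
        graph.setdefault(obj, []).append((f"inverse_{relation}", subject))
    return graph


def explain(graph, start, goal):
    """Return the shortest chain of facts linking start to goal, or None if there is none."""
    came_from = {start: None}  # node -> (previous node, relation used to reach it)
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            steps = []
            while came_from[node] is not None:
                previous, relation = came_from[node]
                steps.append(f"{previous} --{relation}--> {node}")
                node = previous
            return steps[::-1]  # reverse so the chain reads from start to goal
        for relation, neighbour in graph.get(node, []):
            if neighbour not in came_from:
                came_from[neighbour] = (node, relation)
                queue.append(neighbour)
    return None


graph = build_graph(TRIPLES)
for step in explain(graph, "Tracey Emin", "Louvre"):
    print(step)
```

For Tracey Emin and the Louvre it prints four steps: from Tracey Emin through the Artist node to Leonardo da Vinci, then the Mona Lisa, and finally the Louvre. Each line is a fact a person can check, which is what makes the conclusion auditable rather than “because I said so”.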

Explainable AI in action

Sprout.ai has gone beyond the theoretical by putting Explainable AI into action with its automated policy coverage check tool. This practical application of NLP and Knowledge Graphs is something entirely new in the industry, and it represents an important stage in the evolution of AI.

Make no mistake, AI is one of the hottest topics in tech, and it is going to touch every industry, not just insurance. Yet while some people are talking about self-driving cars and machines performing heart surgery, others are still arguing about syntax and language. Explainable AI helps clear the path for AI to progress over the next decade in a way that is understandable, trustworthy and auditable.

Sprout.ai leading the way

At Sprout.ai we are excited and privileged to be at the forefront of Explainable AI innovation. This position gives a competitive edge both to us and to the clients we serve. To stay there, we are constantly pushing boundaries in a range of areas, optical character recognition (OCR) being a prime example.

We know that you don’t stay at the front by standing still, and we are excited to bring new expertise on board to both enhance and challenge our thinking. We were delighted to welcome several new team members earlier this year, and most recently they have been joined by Ivan, who has extensive Kaggle experience.

2020 will be remembered as a challenging year, but in the world of Explainable AI it has also been a watershed one. And this is only the beginning: there are still more exciting times ahead.